Sample-Efficient Covariance Matrix Adaptation Evolutional Strategy via Simulated Rollouts in Neural Networks

Abstract

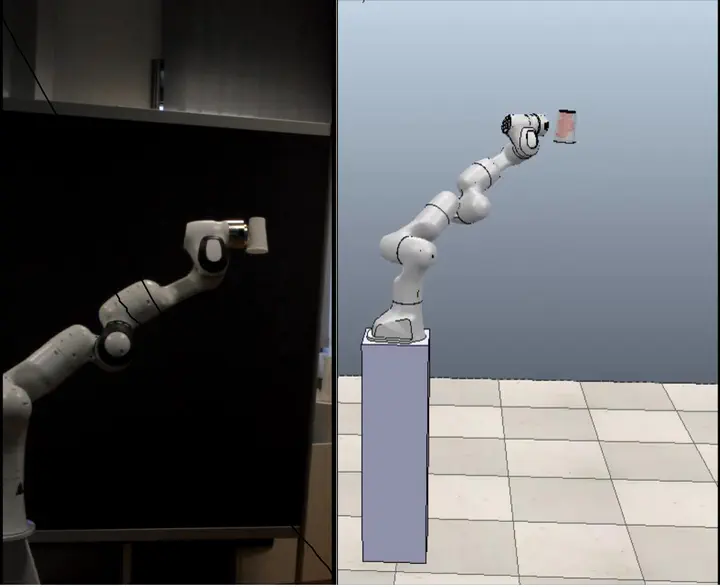

Gradient-free reinforcement learning algorithms often fail to scale to high dimensions and require a large number of rollouts. In this paper, we propose learning a predictor model that enables simulated rollouts in the rank-based black-box optimizer Covariance Matrix Adaptation Evolution Strategy (CMA-ES), improving its sample efficiency. We validated our approach on several benchmark functions, on which our algorithm converges faster than standard CMA-ES. As a next step, we will evaluate the new algorithm on a robot cup-flipping task.
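The abstract does not spell out the exact mechanism. The following Python sketch shows one plausible way a predictor can save real rollouts in a rank-based evolution strategy. It is an illustration under stated assumptions, not the authors' algorithm. The optimizer is a simplified (μ/μ_w, λ) evolution strategy with an isotropic Gaussian and a fixed step-size decay rather than full CMA-ES covariance and step-size adaptation. The predictor is a k-nearest-neighbour regressor standing in for the neural network. Each objective call counts as one real rollout. The names `surrogate_es`, `KNNPredictor`, `rank_weights`, and the `oversample` parameter are hypothetical. Each generation draws `oversample * lam` candidates, ranks them with the predictor (the simulated rollouts), and spends real evaluations only on the `lam` most promising ones. Because the update uses only the ranking of fitness values, the predictor needs to order candidates correctly rather than predict their exact fitness.

```python
import math
import random


def sphere(x):
    """Benchmark objective to minimise: f(x) = sum of x_i^2, optimum at the origin."""
    return sum(v * v for v in x)


def rank_weights(mu):
    """Log-decreasing recombination weights as in CMA-ES; they depend only on rank."""
    raw = [math.log(mu + 0.5) - math.log(i + 1) for i in range(mu)]
    total = sum(raw)
    return [w / total for w in raw]


class KNNPredictor:
    """Hypothetical stand-in for the learned predictor (a neural network in the paper):
    k-nearest-neighbour regression over every (candidate, fitness) pair seen so far."""

    def __init__(self, k=3):
        self.k = k
        self.xs = []
        self.ys = []

    def add(self, x, y):
        self.xs.append(list(x))
        self.ys.append(y)

    def ready(self):
        return len(self.xs) >= self.k

    def predict(self, x):
        # Average fitness of the k closest evaluated points.
        nearest = sorted((math.dist(x, xi), yi) for xi, yi in zip(self.xs, self.ys))[: self.k]
        return sum(y for _, y in nearest) / len(nearest)


def surrogate_es(f, x0, sigma=0.5, lam=8, oversample=4, iters=60, seed=0):
    """Simplified rank-based ES with predictor pre-screening (hypothetical sketch).

    Returns the final mean and the number of real objective evaluations."""
    rng = random.Random(seed)
    n = len(x0)
    mu = lam // 2
    weights = rank_weights(mu)
    mean = list(x0)
    model = KNNPredictor()
    real_evals = 0
    for _ in range(iters):
        # Sample oversample * lam candidates from N(mean, sigma^2 I).
        pool = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                for _ in range(oversample * lam)]
        if model.ready():
            # Simulated rollouts: rank the pool by predicted fitness (cheap).
            pool.sort(key=model.predict)
        candidates = pool[:lam]
        # Real rollouts only for the lam most promising candidates.
        scored = []
        for x in candidates:
            y = f(x)
            real_evals += 1
            model.add(x, y)
            scored.append((y, x))
        scored.sort(key=lambda pair: pair[0])
        # Rank-based recombination: only the order of the fitness values matters.
        elite = [x for _, x in scored[:mu]]
        mean = [sum(w * x[j] for w, x in zip(weights, elite)) for j in range(n)]
        sigma *= 0.95  # fixed decay; CMA-ES would adapt sigma and a full covariance
    return mean, real_evals


if __name__ == "__main__":
    x0 = [1.0] * 5
    for factor in (1, 4):
        mean, evals = surrogate_es(sphere, x0, oversample=factor)
        print(f"oversample={factor}: f(mean)={sphere(mean):.2e} after {evals} real evaluations")
```

Setting `oversample=1` disables pre-screening and recovers the plain evolution strategy with the same number of real evaluations per generation. This gives a simple baseline for comparing convergence on a fixed evaluation budget.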

Type

Publication

In 2nd International Conference on Advances in Signal Processing and Artificial Intelligence